5 Best AI Sentiment Analysis Tools For AI Search Monitoring

Written by Ernest Bogore, CEO

Reviewed by Ibrahim Litinine, Content Marketing Expert

The sentiment AI engines absorb today becomes the narrative they deliver about your brand tomorrow. The advantage goes to teams who detect those shifts before they show up in traffic or revenue.

The real challenge is spotting which sentiment signals matter. A subtle tone change in key prompts, a new citation source that engines start trusting, or a competitor rising in answer sentiment can reshape how LLMs position your brand. You feel the downstream impact, but without the right tools you can’t see the cause early enough to act.

We reviewed the five best AI sentiment analysis tools for AI search monitoring with that problem in mind, choosing platforms that expose prompt-level tone, source influence, competitive movement, and sentiment patterns tied to meaningful outcomes.

This short breakdown shows which tools give you clear, decision-ready insight into why sentiment shifts inside AI answers and which one fits the depth your workflow requires.


TL;DR

| Tool | Best For | AI Sentiment Strengths | AI Search Monitoring Superpower | Ideal Teams |
| --- | --- | --- | --- | --- |
| Analyze AI | Connecting AI visibility to real traffic, conversions, and ROI | Prompt-level sentiment tied to behavior and revenue | Shows which AI engines send sessions, which prompts convert, and which drive ROI | Growth, marketing, RevOps, agencies |
| Brandwatch | Global brand tracking and broad sentiment intelligence | Deep multilingual sentiment and emotion detection | Mirrors large-scale web text that influences AI model outputs | Enterprise brands, PR, research teams |
| Talkwalker | Detecting nuance, sarcasm, and early crisis signals | Emotion-rich models with sarcasm and tone recognition | Surfaces early narrative shifts before they appear in AI-generated answers | Comms, crisis teams, insights teams |
| Sprout Social | Social-first, campaign-linked sentiment monitoring | Short-form nuance: emojis, tone, conversational language | Shows how social mood changes as campaigns evolve, shaping perception in AI apps | Social and comms teams, mid-size marketing teams |
| MonkeyLearn | Custom sentiment for your own text streams | Trainable, domain-specific models for high accuracy | Labels AI outputs, prompts, tickets, and feedback with custom categories | Product, CX, support, data-driven orgs |

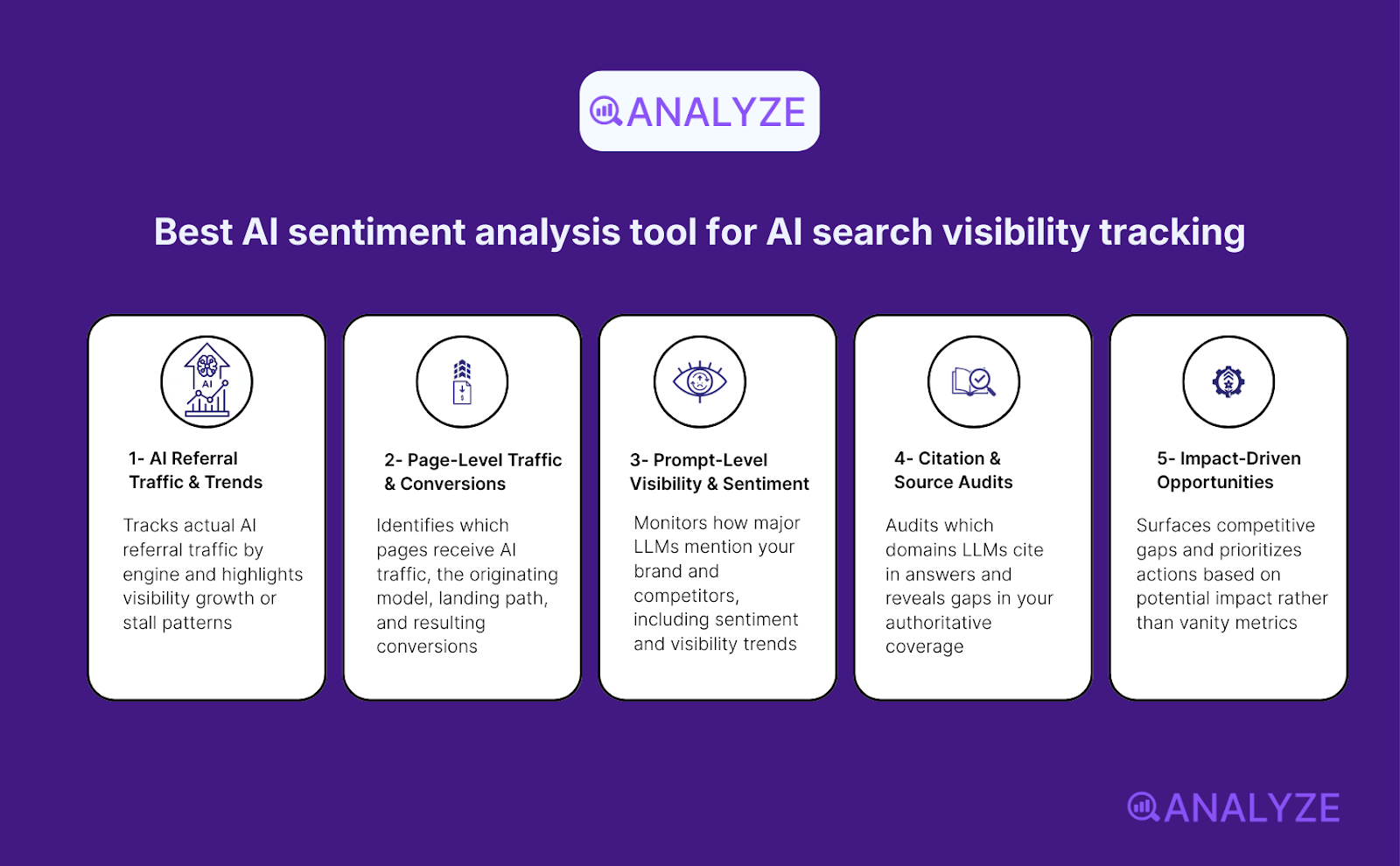

Analyze AI: best AI sentiment analysis tool for AI search visibility tracking

Most generative engine optimization (GEO) tools tell you whether your brand appeared in a ChatGPT response. Then they stop. You get a visibility score, maybe a sentiment score, but no connection to what happened next. Did anyone click? Did they convert? Was it worth the effort?

These tools treat a brand mention in Perplexity the same as a citation in Claude, ignoring that one might drive qualified traffic while the other sends nothing.

Analyze AI connects AI visibility to actual business outcomes. The platform tracks which answer engines send sessions to your site (Discover), which pages those visitors land on, what actions they take, and how much revenue they influence (Monitor). You see prompt-level performance across ChatGPT, Perplexity, Claude, Copilot, and Gemini, but unlike visibility-only tools, you also see conversion rates, assisted revenue, and ROI by referrer.

Analyze AI helps you act on these insights to improve your AI traffic (Improve), all while keeping an eye on the entire market and tracking how your brand sentiment and positioning fluctuate over time (Govern).

Your team then stops guessing whether AI visibility matters and starts proving which engines deserve investment and which prompts drive pipeline.

Key Analyze AI features

-

See actual AI referral traffic by engine and track trends that reveal where visibility grows and where it stalls.

-

See the pages that receive that traffic with the originating model, the landing path, and the conversions those visits drive.

-

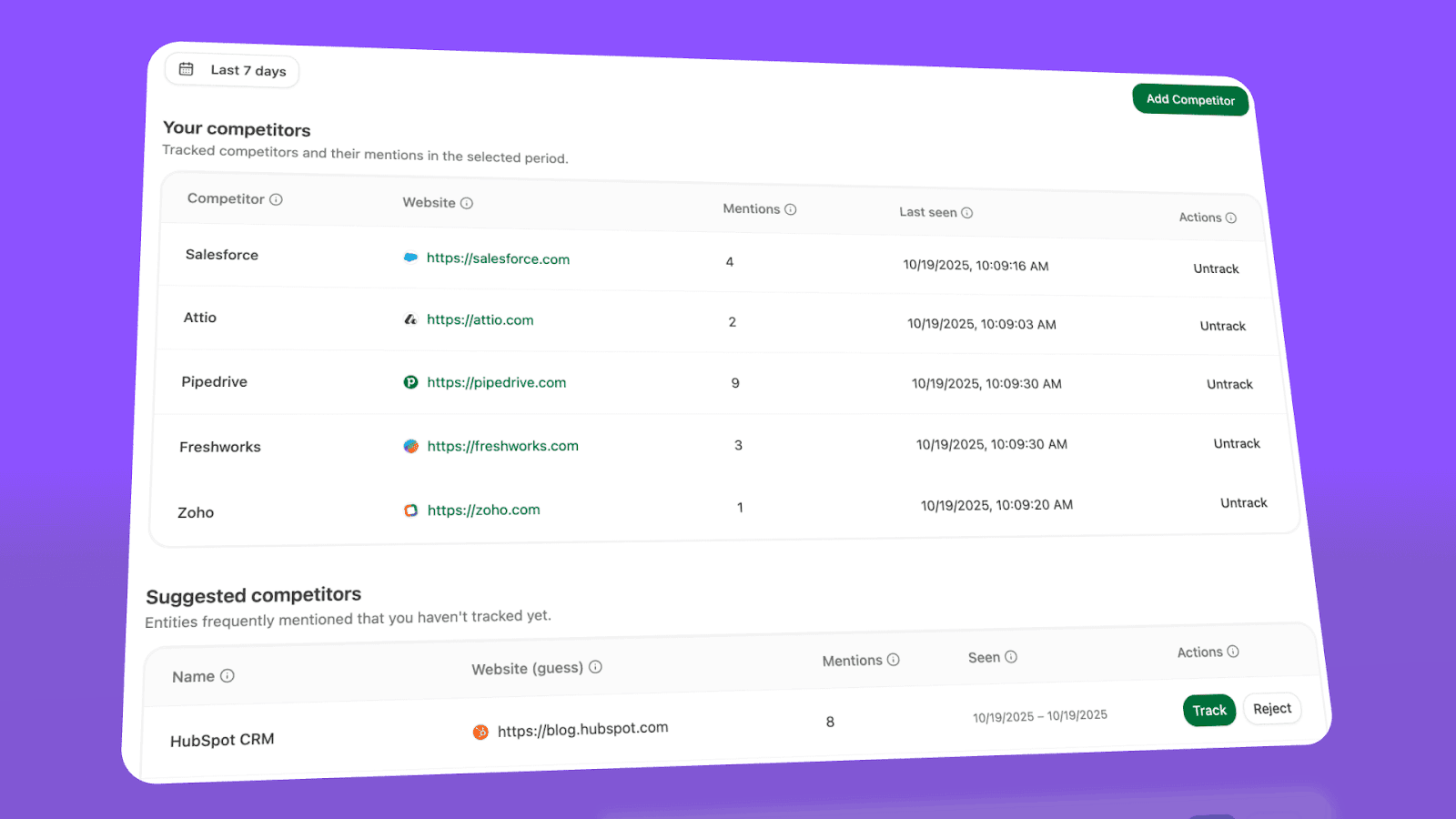

Track prompt-level visibility and sentiment across major LLMs to understand how models talk about your brand and competitors.

-

Audit model citations and sources to identify which domains shape answers and where your own coverage must improve.

-

Surface opportunities and competitive gaps that prioritize actions by potential impact, not vanity metrics.

Here is how Analyze AI works in more detail:

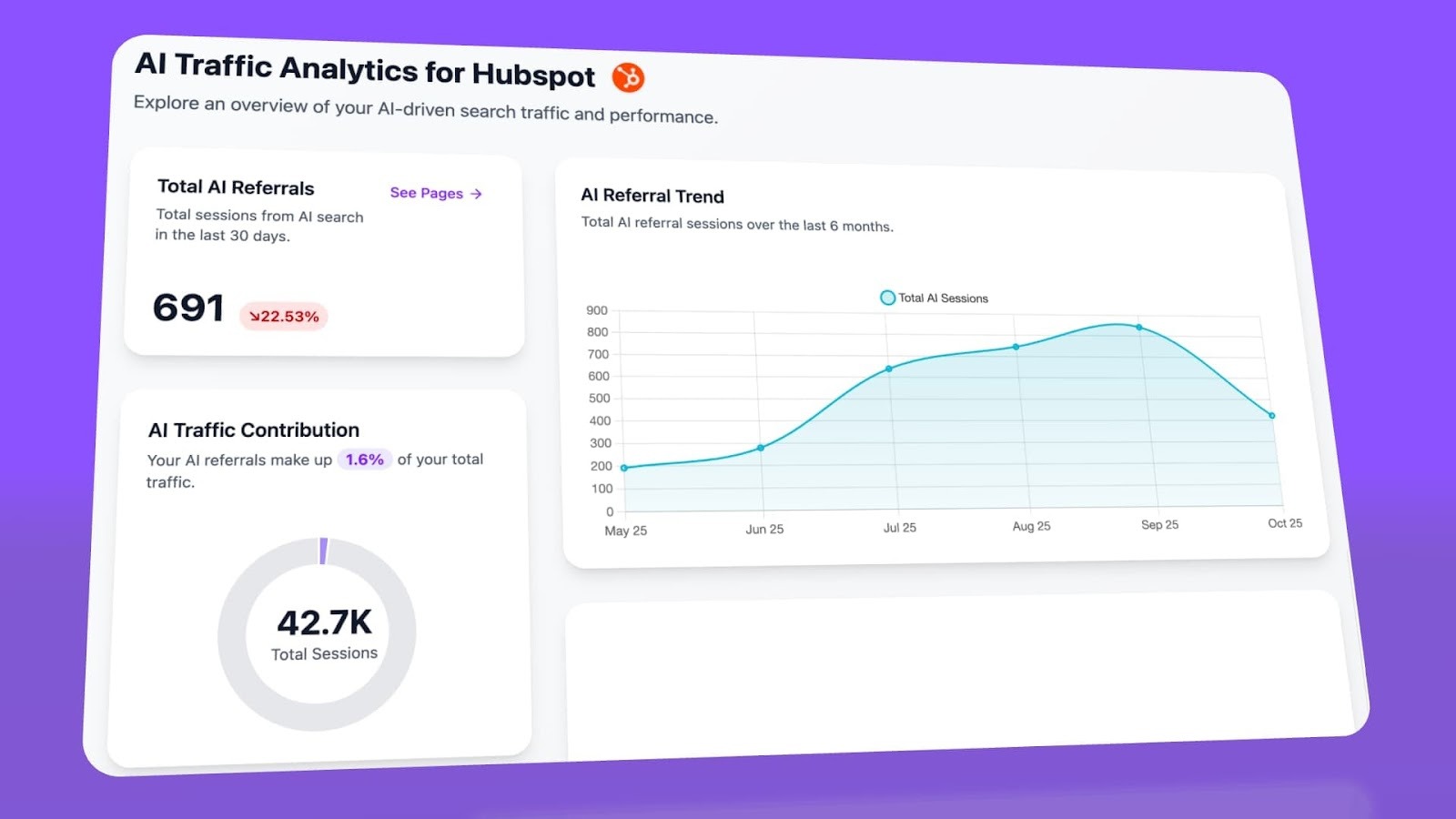

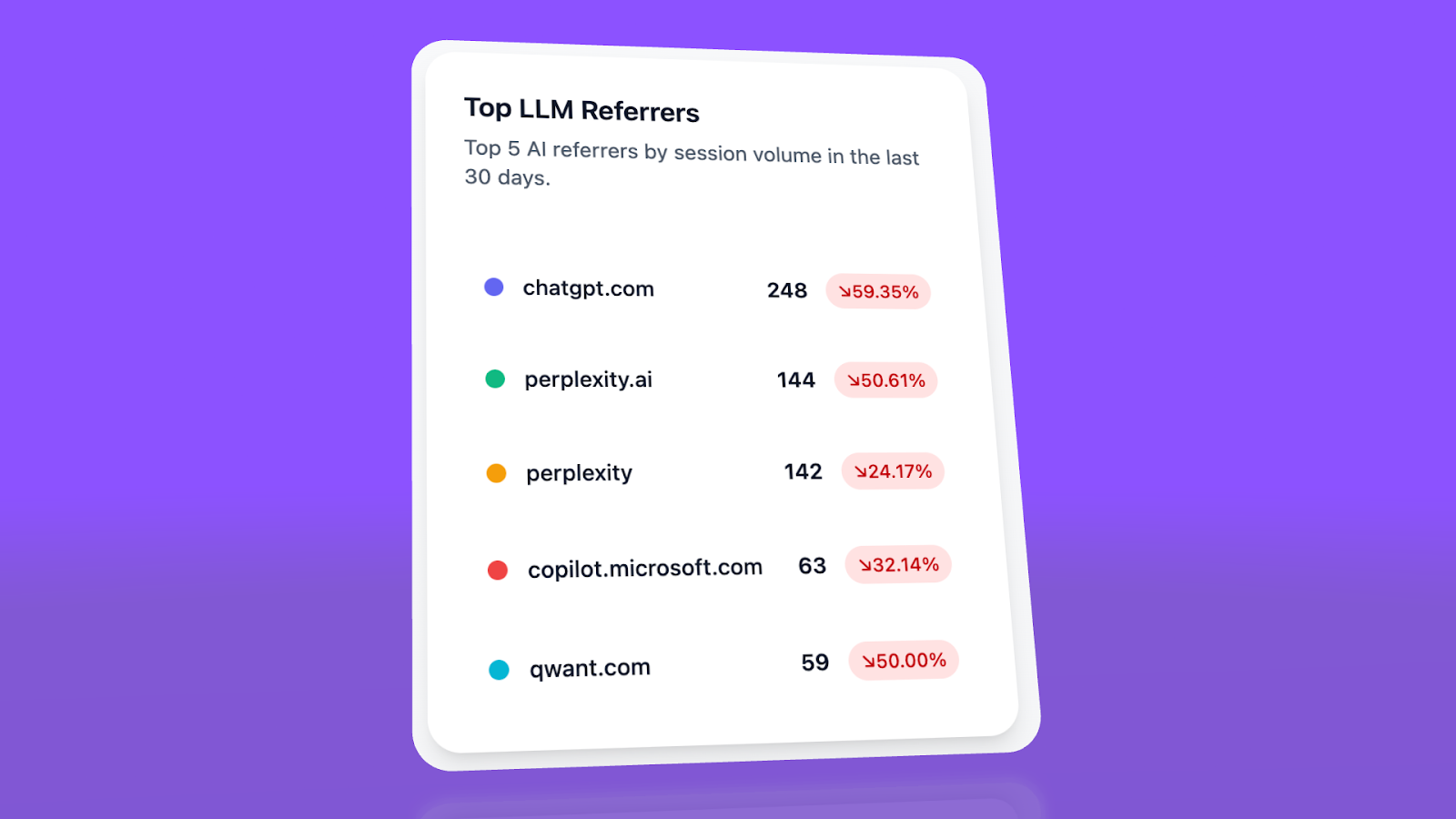

See actual traffic from AI engines, not just mentions

Analyze AI attributes every session from answer engines to its specific source—Perplexity, Claude, ChatGPT, Copilot, or Gemini. You see session volume by engine, trends over six months, and what percentage of your total traffic comes from AI referrers. When ChatGPT sends 248 sessions but Perplexity sends 142, you know exactly where to focus optimization work.
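Referral attribution like this usually starts from the HTTP referrer of each session. The sketch below is a minimal, hypothetical illustration of the idea, not Analyze AI's implementation: the hostname-to-engine mapping in `AI_REFERRERS` is an assumption (real referrer strings vary by engine and change over time), and the function names are made up for this example.

```python
from collections import Counter
from typing import Optional
from urllib.parse import urlparse

# ASSUMPTION: illustrative referrer hostnames for each engine; verify against your own logs.
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "claude.ai": "Claude",
    "copilot.microsoft.com": "Copilot",
    "gemini.google.com": "Gemini",
}


def engine_for(referrer_url: str) -> Optional[str]:
    """Return the AI engine name for a referrer URL, or None if it is not a known AI engine."""
    host = (urlparse(referrer_url).hostname or "").lower()
    # Match the exact host or any subdomain of a known engine host (e.g. www.perplexity.ai).
    for domain, engine in AI_REFERRERS.items():
        if host == domain or host.endswith("." + domain):
            return engine
    return None


def ai_traffic_summary(referrers):
    """Count sessions per AI engine and compute the AI share of all sessions."""
    engines = [engine_for(r) for r in referrers]
    counts = Counter(e for e in engines if e is not None)
    total = len(referrers)
    share = sum(counts.values()) / total if total else 0.0
    return counts, share


# Hypothetical session referrers: 248 from ChatGPT, 142 from Perplexity, 610 from elsewhere.
referrers = (
    ["https://chatgpt.com/"] * 248
    + ["https://www.perplexity.ai/search"] * 142
    + ["https://www.google.com/"] * 610
)
counts, share = ai_traffic_summary(referrers)
```

With this hypothetical data, the summary reports 248 ChatGPT sessions, 142 Perplexity sessions, and an AI share of 0.39 (390 of 1,000 sessions).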

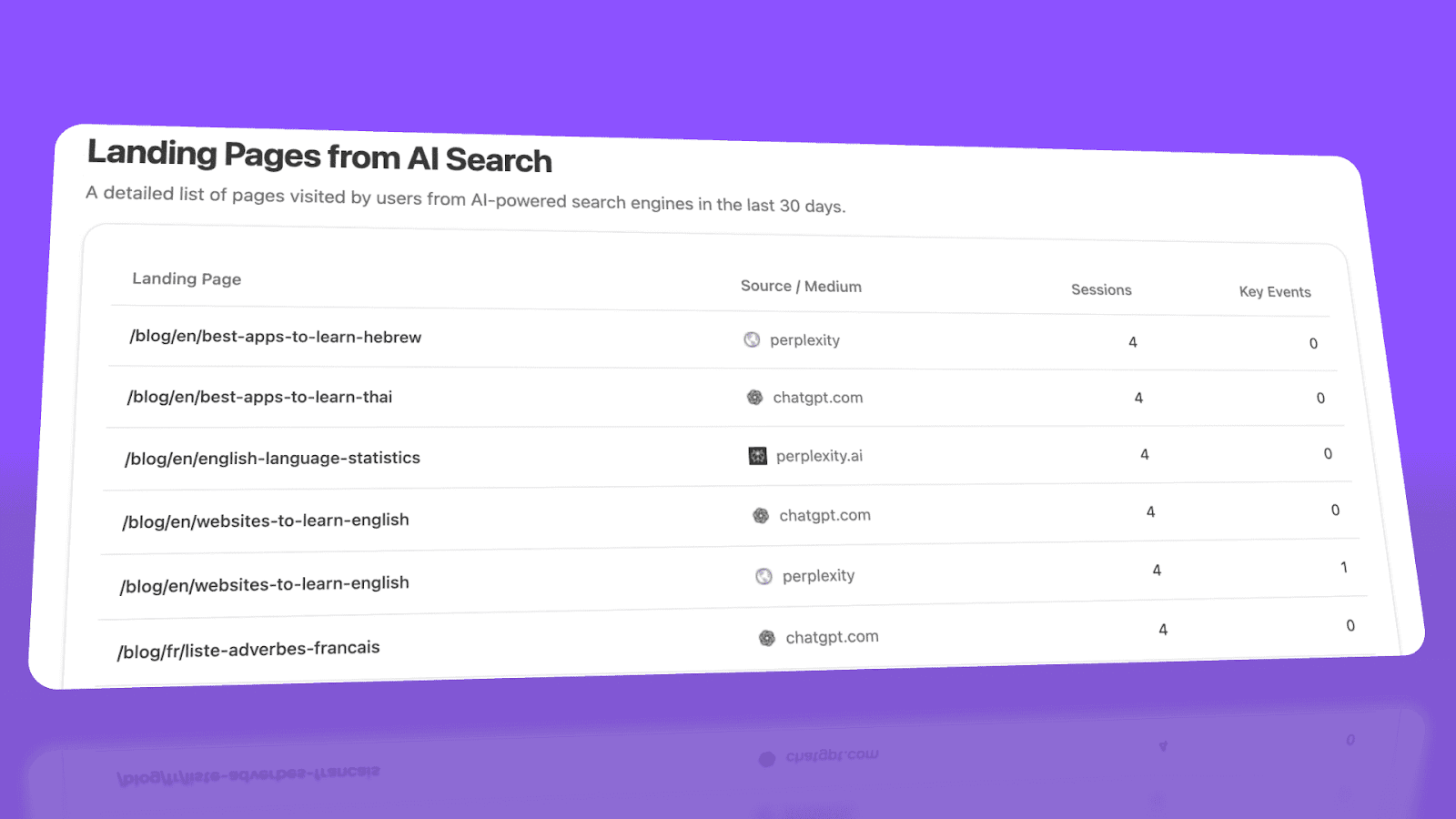

Know which pages convert AI traffic and optimize where revenue moves

Most tools stop at "your brand was mentioned." Analyze AI shows you the complete journey from AI answer to landing page to conversion, so you optimize pages that drive revenue instead of chasing visibility that goes nowhere.

The platform shows which landing pages receive AI referrals, which engine sent each session, and what conversion events those visits trigger.

For instance, when your product comparison page gets 50 sessions from Perplexity and converts 12% to trials, while an old blog post gets 40 sessions from ChatGPT with zero conversions, you know exactly what to strengthen and what to deprioritize.
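The arithmetic behind that comparison is a per-page, per-engine conversion rate: conversions divided by sessions. As a hypothetical sketch (the `(landing_page, engine, converted)` session tuples and the page paths are assumptions, not a real export format), 6 trial sign-ups out of 50 Perplexity sessions gives the 12% rate above.

```python
from collections import defaultdict


def conversion_rates(sessions):
    """Aggregate sessions into {(landing_page, engine): (session_count, conversion_rate)}.

    `sessions` is an iterable of (landing_page, engine, converted) tuples,
    a hypothetical schema used only for this illustration.
    """
    totals = defaultdict(int)
    conversions = defaultdict(int)
    for page, engine, converted in sessions:
        key = (page, engine)
        totals[key] += 1
        conversions[key] += int(bool(converted))
    return {key: (n, conversions[key] / n) for key, n in totals.items()}


# Hypothetical data matching the example: 50 Perplexity sessions with 6 trials,
# and 40 ChatGPT sessions on an old blog post with no conversions.
sessions = (
    [("/compare", "Perplexity", True)] * 6
    + [("/compare", "Perplexity", False)] * 44
    + [("/blog/old-post", "ChatGPT", False)] * 40
)
rates = conversion_rates(sessions)
```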

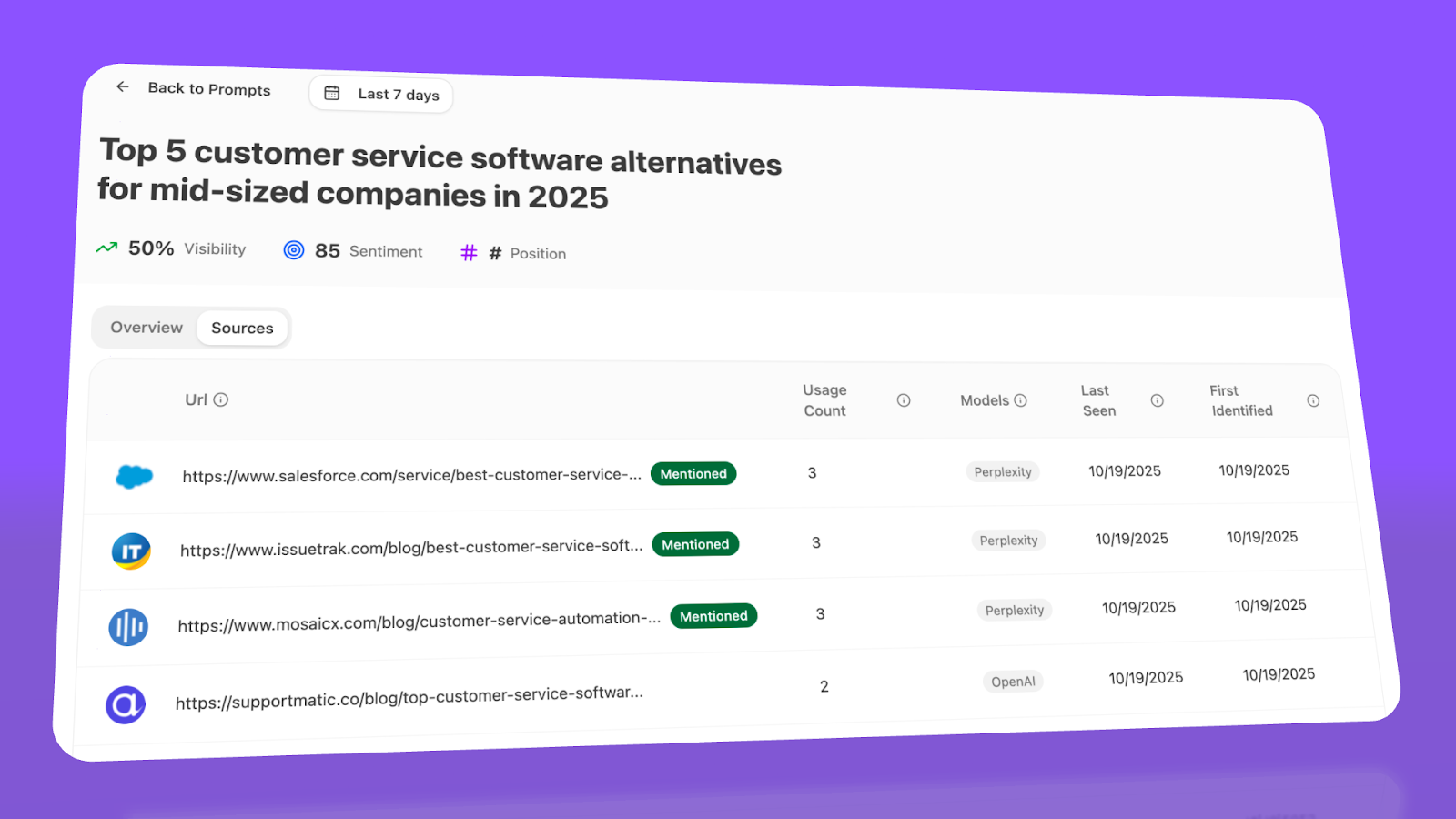

Track the exact prompts buyers use and see where you're winning or losing

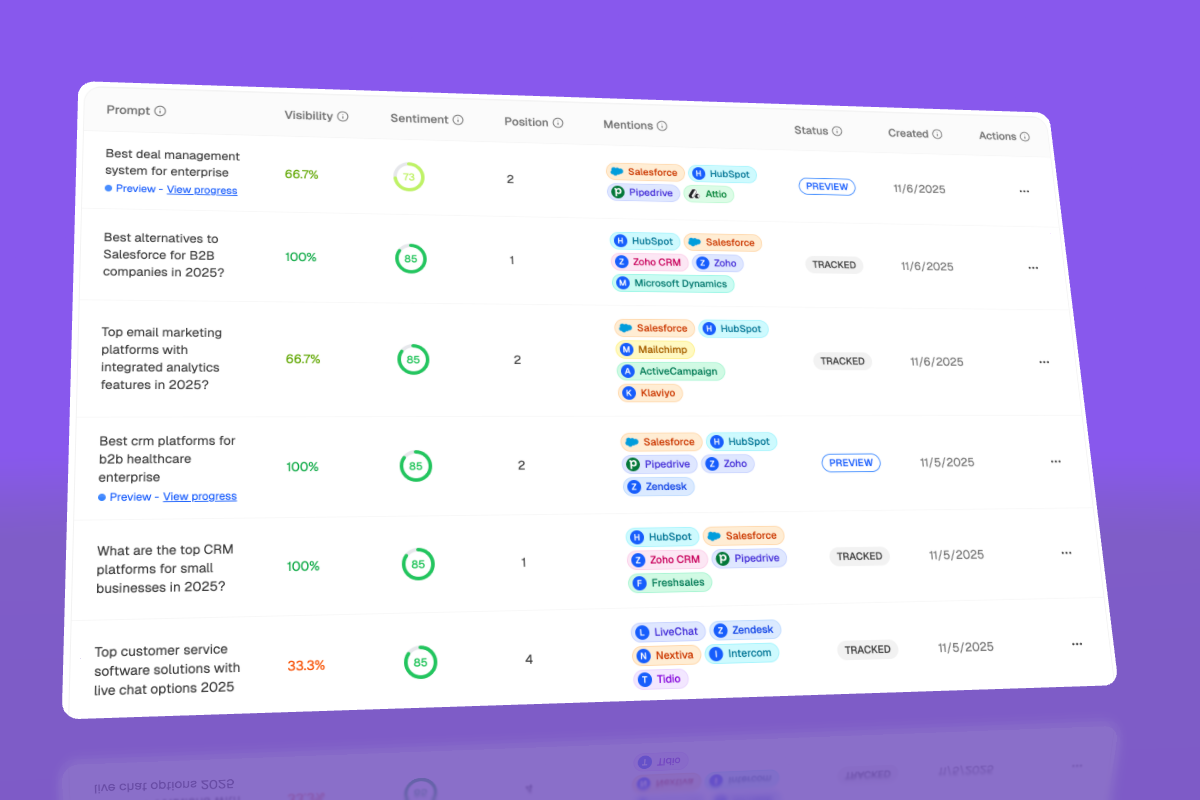

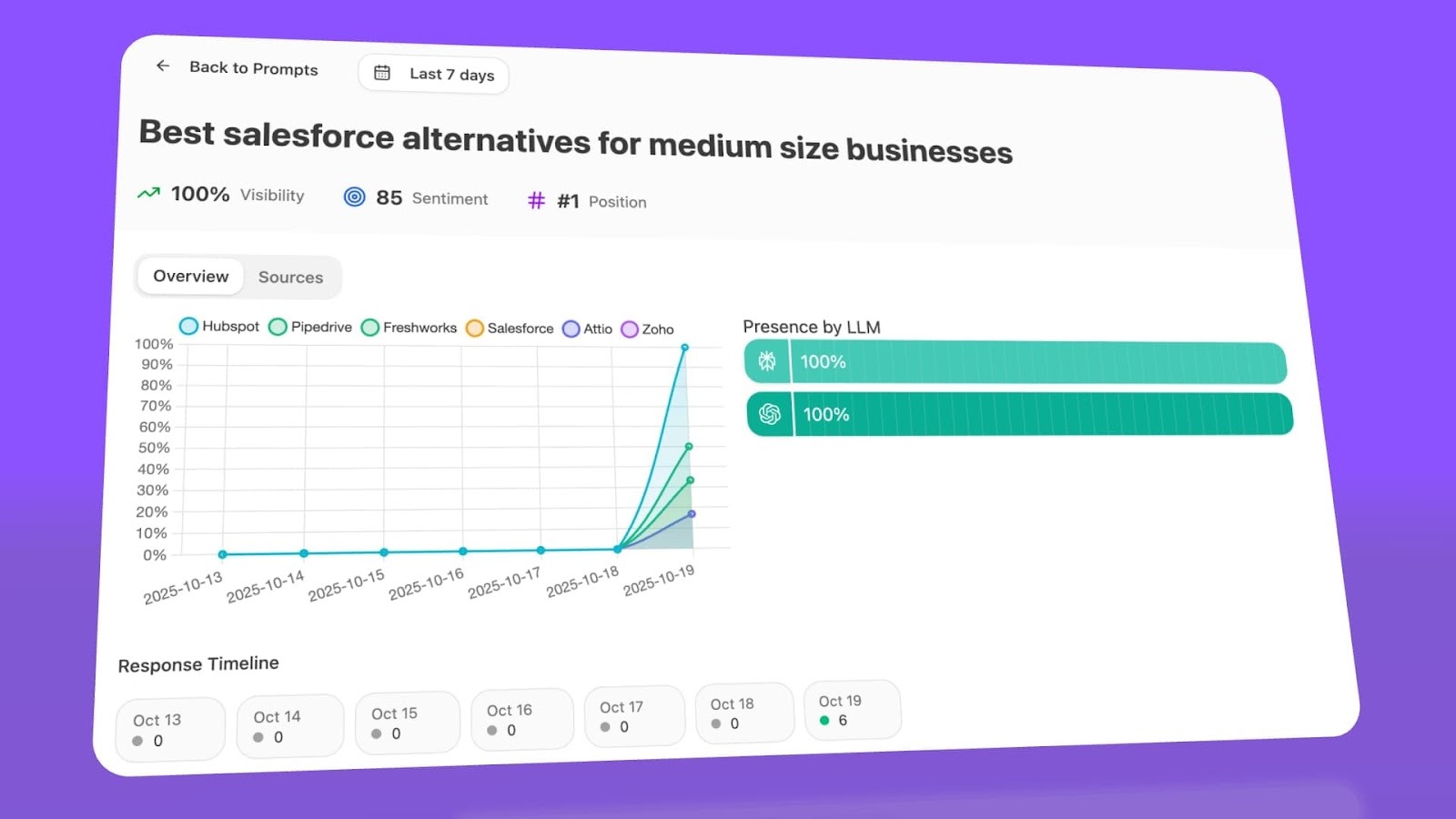

Analyze AI monitors specific prompts across all major LLMs, such as "best Salesforce alternatives for medium businesses," "top customer service software for mid-sized companies in 2026," and "marketing automation tools for e-commerce sites."

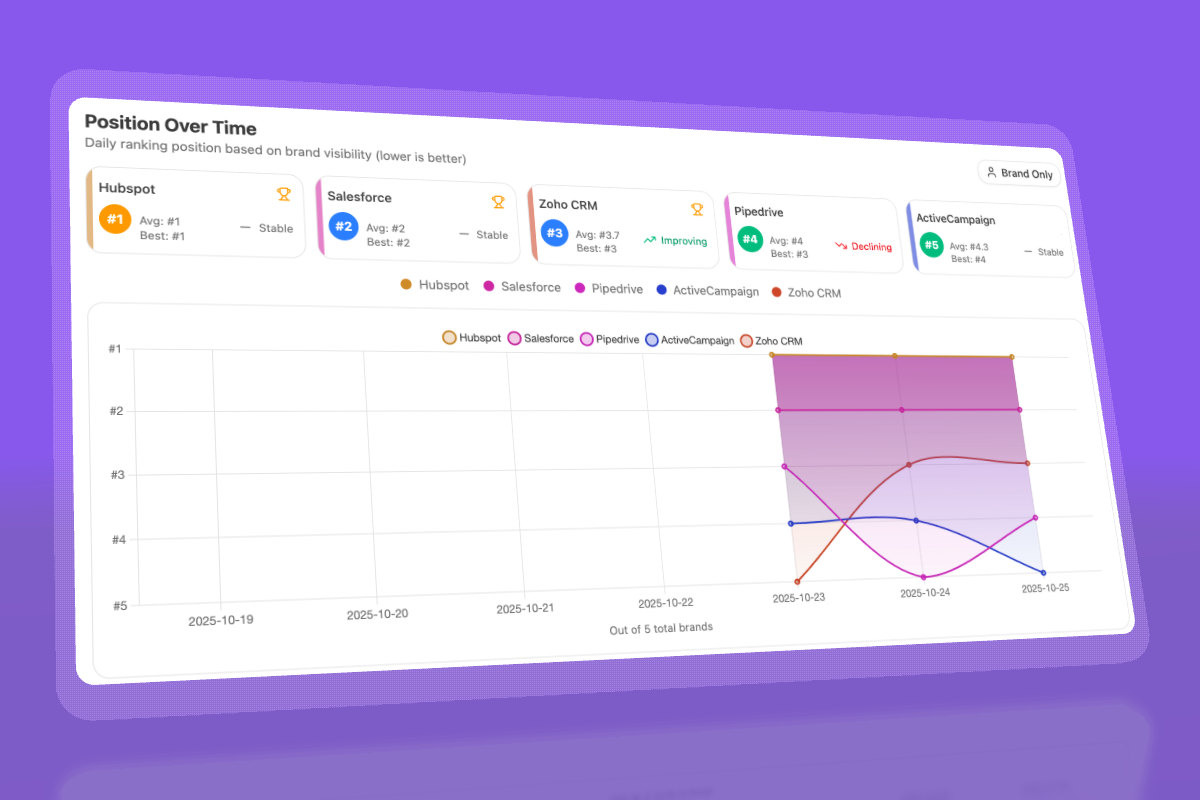

For each prompt, you see your brand's visibility percentage, position relative to competitors, and sentiment score.

You can also see which competitors appear alongside you, how your position changes daily, and whether sentiment is improving or declining.
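One plausible way to compute these per-prompt numbers is to sample each tracked prompt repeatedly and score the answers. The sketch below assumes a hypothetical `AnswerSample` record with a sentiment score on a -1 to 1 scale; visibility is the share of sampled answers that mention the brand, and the trend compares average sentiment before and after a chosen day. It illustrates the metrics, not how Analyze AI calculates them.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class AnswerSample:
    """One sampled AI answer for a tracked prompt (hypothetical schema)."""
    day: int               # day index of the sample
    brand_mentioned: bool  # did the answer mention the brand?
    sentiment: float       # ASSUMED scale -1.0 (negative) to 1.0 (positive); ignored if not mentioned


def visibility_pct(samples):
    """Percentage of sampled answers that mention the brand."""
    if not samples:
        return 0.0
    return 100.0 * sum(s.brand_mentioned for s in samples) / len(samples)


def avg_sentiment(samples):
    """Mean sentiment across answers that mention the brand, or None if there are none."""
    scores = [s.sentiment for s in samples if s.brand_mentioned]
    return mean(scores) if scores else None


def sentiment_trend(samples, split_day):
    """Compare average sentiment before and after split_day."""
    before = avg_sentiment([s for s in samples if s.day < split_day])
    after = avg_sentiment([s for s in samples if s.day >= split_day])
    if before is None or after is None:
        return "insufficient data"
    if after > before:
        return "improving"
    if after < before:
        return "declining"
    return "flat"


# Hypothetical samples for one prompt over two days.
samples = [
    AnswerSample(day=0, brand_mentioned=True, sentiment=0.2),
    AnswerSample(day=0, brand_mentioned=False, sentiment=0.0),
    AnswerSample(day=1, brand_mentioned=True, sentiment=0.6),
    AnswerSample(day=1, brand_mentioned=True, sentiment=0.4),
]
```

Here three of four answers mention the brand (75% visibility), and average sentiment rises from day 0 to day 1.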

Don’t know which prompts to track? No worries. Analyze AI has a prompt suggestion feature that recommends the bottom-of-the-funnel prompts you should keep an eye on.

Audit which sources models trust and build authority where it matters

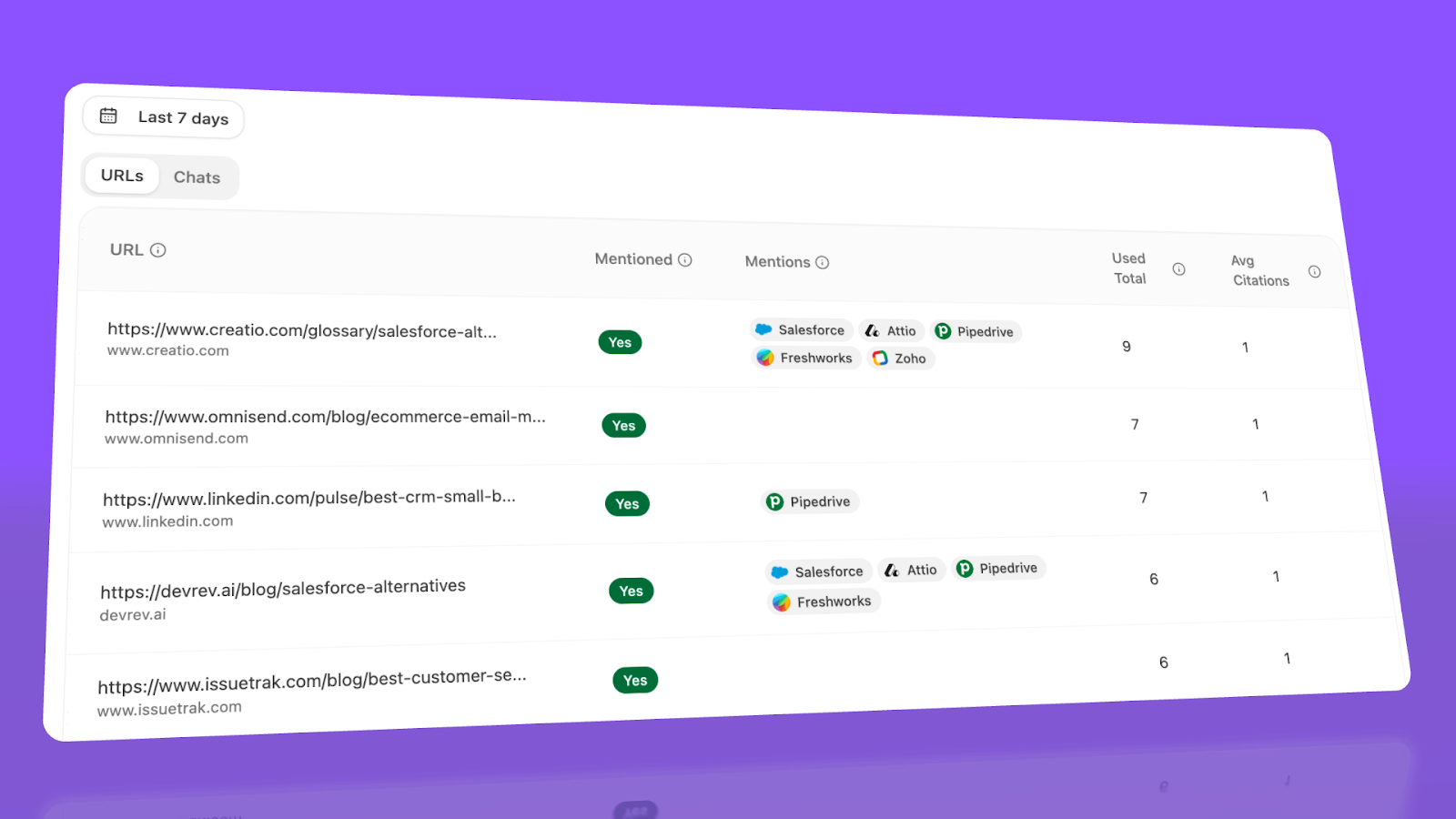

Analyze AI reveals exactly which domains and URLs models cite when answering questions in your category.

You can see, for instance, that Creatio gets mentioned because Salesforce.com's comparison pages rank consistently, or that IssueTrack appears because three specific review sites cite them repeatedly.

Analyze AI shows usage count per source, which models reference each domain, and when those citations first appeared.

Citation visibility matters because it shows you where to invest. Instead of generic link building, you target the specific sources that shape AI answers in your category. You strengthen relationships with domains that models already trust, create content that fills gaps in their coverage, and track whether your citation frequency increases after each initiative.
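Usage count, model coverage, and first-seen date per source can be derived from a log of cited URLs. The following is a minimal sketch, assuming a hypothetical list of `(date, model, url)` citation records and example domains; it is not Analyze AI's data model. Comparing these counts before and after an initiative is one way to check whether citation frequency increased.

```python
from datetime import date
from urllib.parse import urlparse


def citation_summary(citations):
    """Summarize citations per domain.

    `citations` is an iterable of (seen_on: date, model: str, url: str) records,
    a hypothetical format. Returns {domain: {"count", "models", "first_seen"}}.
    """
    summary = {}
    for seen_on, model, url in citations:
        # Normalize "www.example.com" and "example.com" to the same domain (Python 3.9+).
        domain = (urlparse(url).hostname or "").removeprefix("www.")
        entry = summary.setdefault(
            domain, {"count": 0, "models": set(), "first_seen": seen_on}
        )
        entry["count"] += 1
        entry["models"].add(model)
        entry["first_seen"] = min(entry["first_seen"], seen_on)
    return summary


# Hypothetical citation log with placeholder domains.
citations = [
    (date(2025, 1, 5), "ChatGPT", "https://www.reviews.example.com/crm"),
    (date(2025, 1, 3), "Perplexity", "https://reviews.example.com/top-crm"),
    (date(2025, 1, 4), "ChatGPT", "https://blog.example.org/comparison"),
]
summary = citation_summary(citations)
```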

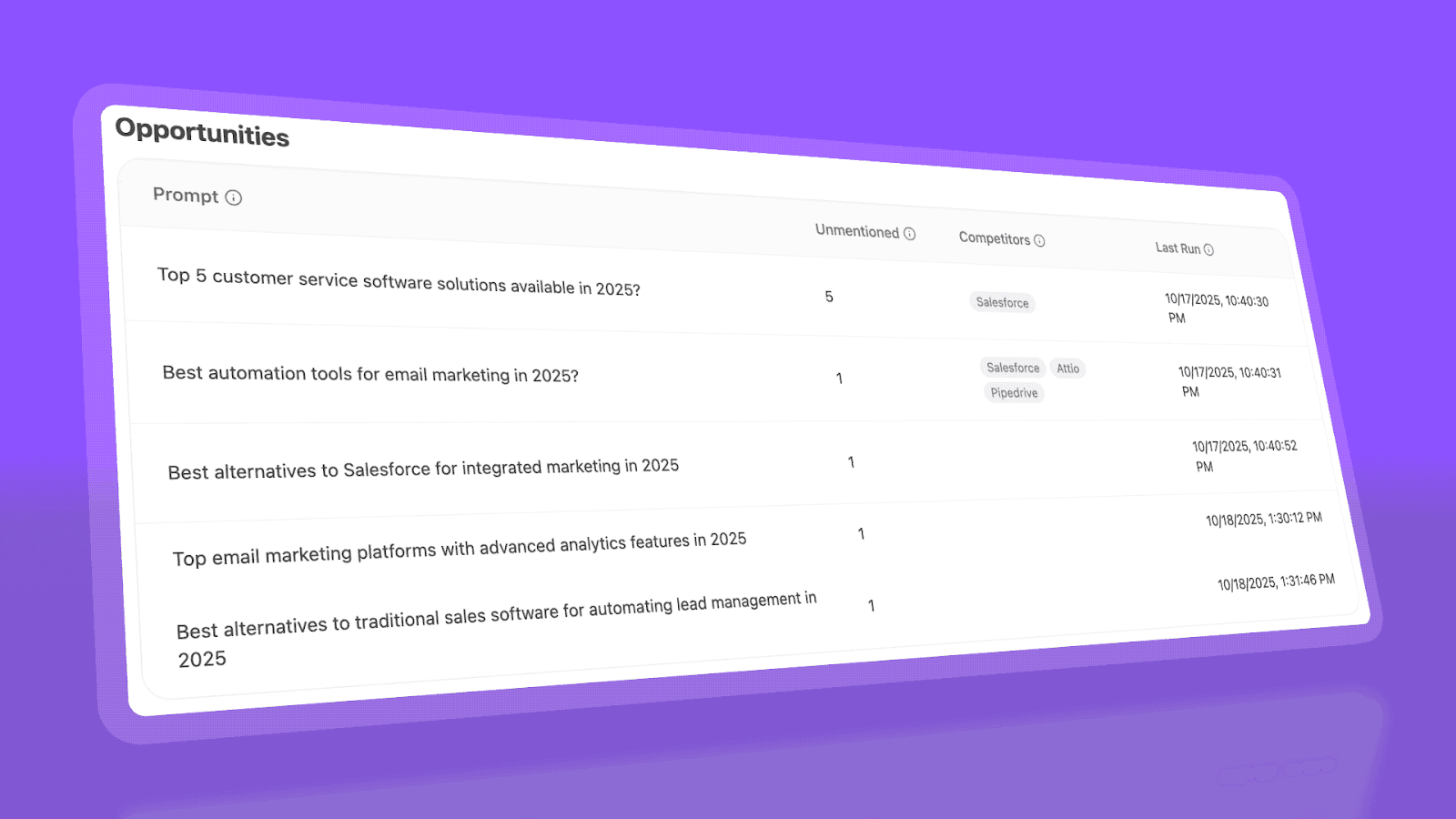

Prioritize opportunities and close competitive gaps

Analyze AI surfaces opportunities based on omissions, weak coverage, rising prompts, and unfavorable sentiment, then pairs each with recommended actions that reflect likely impact and required effort.

For instance, you can run a weekly triage that selects a small set of moves: reinforce a page that nearly wins an important prompt, publish a focused explainer to address a negative narrative, or execute a targeted citation plan for a stubborn head term.
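A weekly triage like this can be approximated by ranking each opportunity on estimated impact relative to effort. The sketch below uses a hypothetical scoring rule (impact divided by effort, both on an assumed 1 to 10 scale) and made-up opportunities; it is not the weighting Analyze AI uses.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    name: str
    impact: float  # ASSUMED estimated impact score, 1-10
    effort: float  # ASSUMED estimated effort score, 1-10 (must be > 0)


def weekly_triage(opportunities, k=3):
    """Return the k opportunities with the highest impact-to-effort ratio."""
    return sorted(opportunities, key=lambda o: o.impact / o.effort, reverse=True)[:k]


# Hypothetical backlog for one week.
backlog = [
    Opportunity("Targeted citation plan", impact=9, effort=6),
    Opportunity("Reinforce comparison page", impact=8, effort=2),
    Opportunity("Full site redesign", impact=5, effort=10),
    Opportunity("Publish explainer", impact=6, effort=3),
]
picks = weekly_triage(backlog)
```

A ratio favors cheap wins; teams that care more about absolute impact could sort by impact first and use effort only as a tiebreaker.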

Analyze AI for AI sentiment analysis: what matters most

| Capability | Why it matters for AI sentiment analysis |
| --- | --- |
| Engine-level referral tracking | Shows which AI models drive traffic that matters, not just where you are mentioned. |
| Prompt-level visibility & sentiment | Reveals which queries generate positive, neutral, or negative sentiment, enabling targeted optimization. |
| Conversion linkage | Ties AI referrals to page conversions and revenue to validate the impact of AI visibility efforts. |
| Citation auditing | Identifies which sources AI models trust, allowing targeted content and citation strategies. |
| Competitive gap analysis | Highlights gaps where competitors appear more often or with better sentiment. |
| Priority actions roadmap | Suggests high-impact moves such as improving content for specific prompts or reinforcing strong pages. |
| Multi-engine coverage | Tracks ChatGPT, Perplexity, Claude, Copilot, and Gemini to reveal where models differ and where to act. |

Best-fit use cases

- Agencies and marketing teams needing proof of ROI for AI visibility efforts
- Teams prioritizing prompt-level testing and optimization instead of vanity metrics
- Brands that want to link content and pipeline through AI referral data
- Competitive teams looking to close sentiment or citation gaps in AI answers

Analyze AI bridges the gap between AI visibility and tangible business outcomes by linking engine referrals, prompt performance, and real conversions. Its focus on prompt-level sentiment and revenue impact makes it ideal for teams that want to prove that AI visibility is worth their investment.

Brandwatch: best AI sentiment analysis tool for global social listening

Key Brandwatch standout features

- Huge real-time dataset from social, forums, blogs, reviews, and news
- AI sentiment scoring that handles slang, emotion, and basic nuance
- Trend tracking with alerts for sharp mood spikes
- Audience filters that show who drives each sentiment shift
- Integrations that push insights into CRM and marketing tools

Brandwatch gives teams a wide and deep view of how people feel about their brand across many channels and regions. Its dataset covers both long-term history and current conversation, which helps teams track slow mood shifts as well as sudden changes that may later show up in AI answers. This broad context matters because AI models learn from large pools of public text, and a tool that mirrors this pool gives teams a strong signal for watching how sentiment grows or fades over time.

Once data is collected, Brandwatch offers rich ways to read the story inside it. You can slice mood by market, theme, or audience, then link those shifts to real actions like product changes, campaigns, or support issues. This link helps teams see how public feeling moves and how that feeling may shape summaries produced by AI systems. The dashboards support this work with clear charts that show when mood changes and what type of talk drives the change.
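Spike alerts of the kind listed above generally compare today's value with a recent baseline. As a generic illustration (a rolling z-score, which is an assumption here and not Brandwatch's documented alerting method), the sketch below flags days whose share of negative mentions jumps well above the previous week. The window size, threshold, and daily values are hypothetical.

```python
from statistics import mean, pstdev


def sentiment_spikes(daily_negative_share, window=7, z=3.0):
    """Return indices of days whose negative-mention share is more than `z`
    standard deviations above the mean of the preceding `window` days."""
    spikes = []
    for i in range(window, len(daily_negative_share)):
        history = daily_negative_share[i - window:i]
        mu, sigma = mean(history), pstdev(history)
        today = daily_negative_share[i]
        if sigma == 0:
            # Perfectly flat history: treat any increase as a spike.
            if today > mu:
                spikes.append(i)
        elif (today - mu) / sigma > z:
            spikes.append(i)
    return spikes


# Hypothetical daily share of negative mentions; day 7 jumps sharply.
negative_share = [0.10, 0.12, 0.11, 0.10, 0.12, 0.11, 0.10, 0.35, 0.11]
alerts = sentiment_spikes(negative_share)
```

Only day 7 is flagged; day 8 falls back to normal levels. A rule this simple also produces the false alarms the reviews mention, which is why human review still matters.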

Yet this depth brings weight. Many users say the setup takes time, and that the platform needs steady use before it feels natural. Smaller teams may struggle with the price or with the learning curve, even though the insight is strong. Workflows can also feel heavy when you run broad searches or query long time spans.

Some users also point out limits in how the AI reads tricky tone like sarcasm or mixed mood in one post. That is normal for sentiment tools, yet it means teams should still apply human judgment on edge cases. Slower load times on very large queries also appear in reviews, which can slow teams during fast analysis moments. These weaknesses do not break the platform, but they shape how teams should plan their work with it.

Brandwatch for AI sentiment analysis: what matters most

| Capability | Why it matters for AI sentiment analysis |
| --- | --- |
| Wide data coverage | A large dataset mirrors the kind of text that influences AI answers, giving teams early signals. |
| Long historical archive | Past mood patterns help teams model how sentiment trends may push AI summaries in new directions. |
| Emotion and nuance detection | Labels that show tone shifts help teams predict changes that may shape AI brand mentions. |
| Audience and topic filters | Filters show which groups cause mood spikes that might appear later in AI-generated responses. |
| Trend and spike alerts | Alerts help teams act fast before negative talk grows strong enough to affect AI models. |
| Clear dashboards | Easy views help non-technical teams use sentiment in AI visibility work. |
| Integrations | Links let teams push sentiment insight into campaigns that aim to improve AI search presence. |
| Custom queries and themes | Custom setup allows tracking of brand, product, or issue themes that matter in AI answers. |

Best-fit use cases

- Large brands tracking global mood across many languages
- PR teams watching early risk signals to protect AI search presence
- Insights teams that link sentiment trends with AI visibility tests
- Agencies that run brand sentiment projects for many clients

Brandwatch gives teams a wide, detailed view of public mood that matches the scale of the text that shapes AI answers. It suits brands that need strong signals to guide AI search work and can support the setup this tool requires.

Talkwalker: best AI sentiment analysis tool for nuanced emotion and risk detection

Key Talkwalker standout features

- AI-driven sentiment scoring that detects sarcasm, slang, and tone shifts
- Real-time tracking of conversations across social, blogs, forums, and news
- Trend, crisis, and brand-risk alerts that surface fast sentiment spikes
- Multilingual support with advanced models for 180+ languages
- Dashboards and alerts that support custom risk and trend workflows

Talkwalker gives teams a broad and detailed view of global talk while adding layers of emotion and nuance that basic sentiment tools miss. Its models highlight sarcasm, snark, and sharp tone changes, which allows teams to see early mood shifts that may later influence how AI systems frame a brand. This extra depth matters because AI engines often blend many voices into one summary, and early detection gives teams more space to respond before that summary takes hold.

Beyond raw detection, the tool connects each mention to a larger pattern by tagging tone, theme, and implied intent. With data from more than 150 million sources and strong language coverage, teams gain a view that spans markets and cultures without losing signal quality. Filters let you track mood in one region or around one theme, so you can see which groups change tone first and how fast that change travels. That clarity helps teams steer narrative work before those shifts show up inside AI answers.

Yet this power introduces extra steps for setup and training. Many users say the platform demands time to learn, and that custom dashboards, filters, and alert rules need care before they start producing clean results. Cost also appears as a point of friction, especially for smaller groups that do not need deep nuance or broad reach. These factors make it better suited to teams with enough time and structure to use the tool well.

Another point raised in reviews concerns the limits of nuance detection. Even strong AI models struggle with sarcasm layered inside mixed tone or coded language. Some alerts may signal risk that later proves mild, while other posts may need human review to understand the real sentiment. This means the tool works best as a guide that surfaces patterns, not as a full replacement for human reading on sensitive topics.

Talkwalker for AI sentiment analysis: what matters most

| Capability | Why it matters for AI sentiment analysis |
| --- | --- |
| Sarcasm and nuance detection | Helps teams spot risky mood early and act before narrative hardens in AI responses. |
| Real-time trend & risk alerts | Signals early spikes so teams can respond before models pick up negative trends in public discourse. |
| Broad and multilingual coverage | Reflects the global data that trains or informs AI models, improving signal quality for global brands. |
| Segmentation & filters | Supports precise tracking of audience groups whose mood swings later show up in AI summaries. |
| Custom dashboards & alerts | Converts sentiment signals into workflows teams can use in content, PR, and AI strategy. |
| Seamless integrations | Lets insight flow into CRMs, BI tools, and campaign platforms. |
| Large data pool (150M+ sources) | Captures signals across major social, blog, forum, and news networks before AI systems amplify opinions. |

Best-fit use cases

- Communication teams tracking mood shifts before they shape AI outputs
- Risk and crisis teams that need early warning on sharp negative spikes
- Insights teams linking sentiment trends to tests on AI search visibility
- Agencies running brand and sentiment work for many clients

Talkwalker stands out when you need sharp visibility into emotional tone and early risk signals. Its wide reach and strong nuance detection help teams guide the narrative before it becomes part of AI’s memory.

Sprout Social: best AI sentiment analysis tool for campaign-linked social monitoring

Key Sprout Social standout features

- Sentiment widget that tracks positive, neutral, and negative mentions over time
- AI models that read complex phrases and emojis to reveal mood with more accuracy
- Real-time dashboards for trends, share of voice, and campaign impact
- Full workflow in one place: publishing, inbox, care, reporting, and listening
- Multilingual support with manual sentiment fixes to improve accuracy

Sprout Social brings sentiment and listening into the same space where teams already manage posts, replies, and customer care. This setup lets social teams watch mood shifts in real time without switching tools, which helps them respond faster and with more context. Its AI reads tone in short posts, emojis, and casual language, which fits the fast style of social talk. You get a clear sense of how people feel and how that feeling moves as campaigns launch, trends rise, or questions appear in the inbox.

Because the platform unifies publishing, care, analytics, and listening, it supports tight feedback loops. When sentiment drops, teams can adjust posts or support replies right away, then see the effect inside the same dashboard. This helps brands test ideas, refine messages, and keep a close link between content and public mood. That loop is useful when you want to shape how your brand appears in AI answers because every shift in tone on social can influence long-term perception.

Even with these benefits, there are some limits. Listening is a paid add-on, and the overall cost grows as you add more seats or advanced features. Smaller teams may find the pricing tough, especially when they only need light listening or simple sentiment tracking. The tool also focuses on social data rather than full web coverage, so you do not get the same reach across forums, news sites, and large text corpora as you would with enterprise-first tools.

Another point to note is the setup needed for good results. Teams must define topics and filters with care, which takes time and practice. If your queries are too broad, the results can feel noisy or unfocused. Some users mention that the tool handles most sentiment well but still needs manual checks on tricky tone or mixed emotion. These points do not reduce its value for social teams, yet they shape how much effort you should expect when building your listening program.

Sprout Social for AI sentiment analysis: what matters most

| Capability | Why it matters for AI sentiment analysis |
| --- | --- |
| Social sentiment tracking | Shows how public mood shifts as content changes, which guides AI visibility work. |
| Emoji and nuance reading | Captures tone in short posts that shape early signals in AI models. |
| Real-time dashboards | Helps teams act before negative talk spreads or becomes part of larger narratives. |
| Unified workflow | Combines publishing, replies, and sentiment in one space for faster response loops. |
| Manual sentiment fixes | Lets teams correct edge cases so data stays clean for analysis. |
| Multilingual support | Tracks global reaction when brands run international campaigns. |
| Campaign impact tracking | Shows which moves improve mood and which create risk that AI may reflect later. |

Best-fit use cases

- Social teams that need to watch mood while they post and reply
- Brands running campaigns linked to AI visibility or brand trust
- Comms teams that want fast alerts and simple action steps
- Mid-size teams that prefer one tool for publishing, care, and sentiment

Sprout Social works best when sentiment insight must live close to daily social work. Its real-time feedback and clear dashboards help teams guide public mood before those signals shape how AI engines describe the brand.

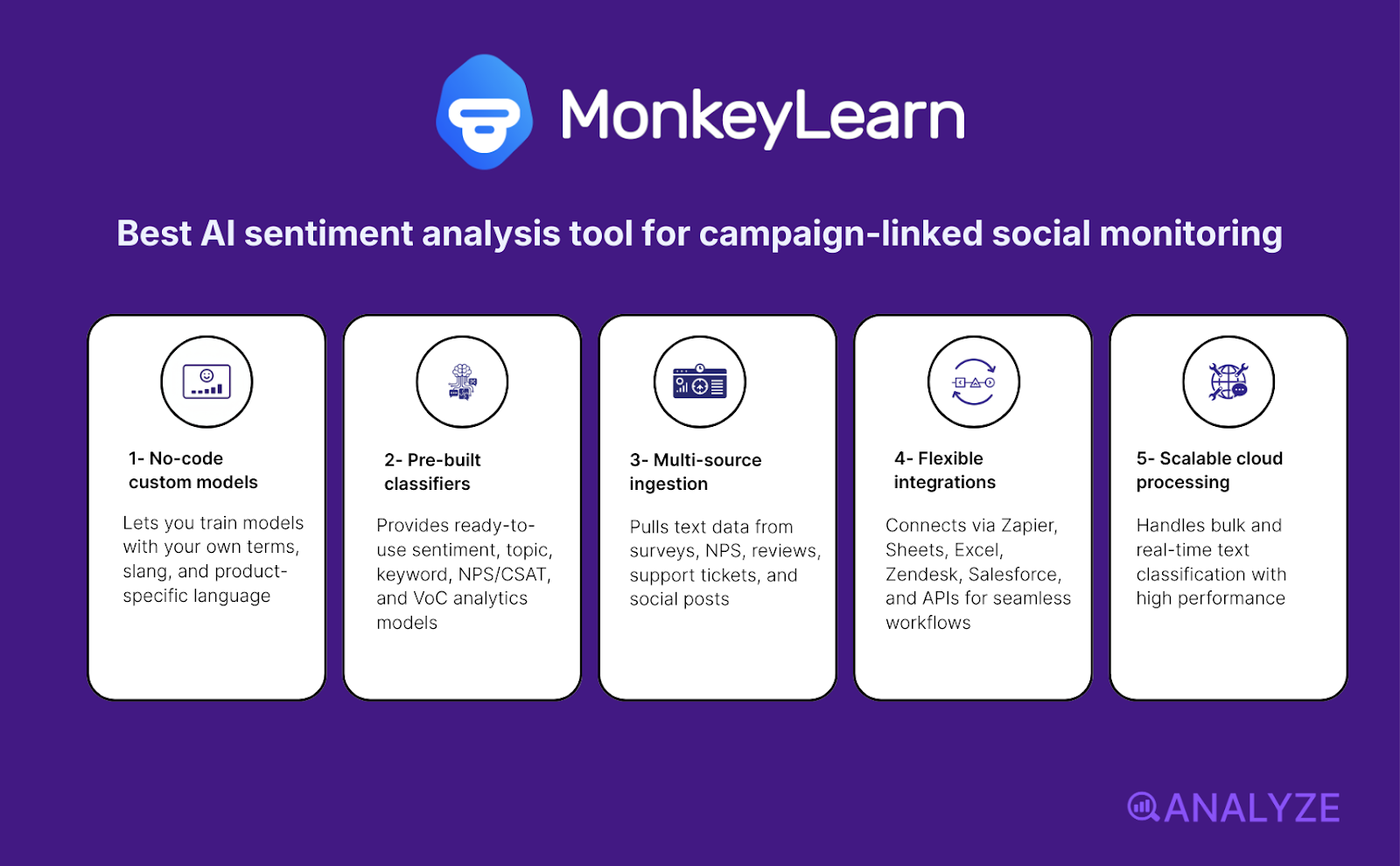

MonkeyLearn: best AI sentiment analysis tool for custom, no-code text modeling

Key MonkeyLearn standout features

- No-code models you can train with your own terms, slang, and product language
- Pre-built classifiers for sentiment, topics, keywords, NPS/CSAT, and VoC analytics
- Multi-source ingestion across surveys, NPS, reviews, tickets, and social posts
- Integrations through Zapier, Sheets, Excel, Zendesk, Salesforce, and APIs
- Scalable cloud processing for bulk and real-time text classification

MonkeyLearn gives teams a simple way to turn raw text into structured insight without needing data science skills. It supports both pre-built models and custom ones, which means you can shape sentiment and topic detection around your brand language. This helps teams catch patterns in feedback, tickets, reviews, and social talk, often more accurately than generic APIs handle domain-specific language. Its no-code setup lowers the barrier for marketing, CX, and product teams that need to learn from text fast and adjust content or messaging based on what people say.

Because the tool accepts input from many sources—surveys, NPS, reviews, tickets, and social feeds—you can centralize feedback that normally sits in fragments. Dashboards show trends, themes, and shifts in sentiment, which helps teams find drivers behind praise or frustration. Integrations through Zapier and APIs make it easy to push classified text into BI tools or CRMs, so insight becomes part of your daily decision-making rather than a separate task. This makes MonkeyLearn flexible for experiments, internal tools, and ongoing analysis.

On the other hand, MonkeyLearn sits closer to a focused text-analysis engine than a full workflow platform. Some newer automation tools cover entire processes, while MonkeyLearn centers on modeling and classification. This narrower focus works well when you want control, but teams looking for “all-in-one automation” may need extra tools to support their full workflow. Pricing also deserves careful planning, since costs rise as classification volume increases. Heavy usage or multi-team deployments can push you into higher tiers quickly.

Another factor to consider is the time needed to reach strong accuracy. Custom models improve performance, yet they require labeled examples and some iteration. Teams without enough data or capacity may prefer fully pre-trained sentiment APIs, even if they lose accuracy on domain-specific language. MonkeyLearn performs well once tuned, but tuning requires effort. For teams that value domain accuracy and customization, the trade-off is often worth it.
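To see why labeled, domain-specific examples matter, the sketch below trains a tiny multinomial naive Bayes classifier with Laplace smoothing on a handful of examples. It is a generic technique chosen for illustration, not MonkeyLearn's model or API, and the training texts and labels are made up.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayesSentiment:
    """Minimal multinomial naive Bayes classifier (generic technique, illustrative only)."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        self.vocab = set()
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        self.total_words = {c: sum(wc.values()) for c, wc in self.word_counts.items()}
        return self

    def predict(self, text):
        n_docs = sum(self.class_counts.values())
        v = len(self.vocab)
        best_label, best_score = None, -math.inf
        for label, count in self.class_counts.items():
            # Log prior plus log likelihood of each token under this class.
            score = math.log(count / n_docs)
            for tok in tokenize(text):
                # Laplace (+1) smoothing so unseen words don't zero out a class.
                score += math.log(
                    (self.word_counts[label][tok] + 1) / (self.total_words[label] + v)
                )
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical labeled examples using domain language about AI answers.
texts = [
    "the answer hallucinated our pricing",
    "wrong pricing again, totally made up",
    "great summary, accurate and clear",
    "accurate citations, love it",
]
labels = ["negative", "negative", "positive", "positive"]
model = NaiveBayesSentiment().fit(texts, labels)
```

Trained on these four examples, the model labels "made up pricing" as negative and "accurate and clear" as positive, because those domain terms only appear in one class. Real models need far more labeled data, which is the effort the paragraph above describes.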

MonkeyLearn for AI sentiment analysis: what matters most

| Capability | Why it matters for AI sentiment analysis |
| --- | --- |
| Custom sentiment models | Lets teams track tone related to AI answers, complaints, or praise with brand-specific language. |
| Topic and intent classification | Helps group issues like “hallucination,” “pricing pushback,” or “feature gaps” for deeper AI insight. |
| Multi-source ingestion | Combines chat logs, prompts, reviews, and Reddit talk that influence how users judge AI quality. |
| Dashboards for trend tracking | Shows how opinion moves as you adjust content, schema, or product messaging for AI visibility. |
| Integrations and APIs | Pushes labeled sentiment into BI tools, CRMs, or internal AI dashboards for team-wide use. |
| Scalable processing | Handles large batches of AI outputs or user queries without slowing workflows. |
| No-code training | Lets non-technical teams adapt models as products and AI narratives evolve. |

Best-fit use cases

- Product or CX teams that need custom labels for AI-related feedback
- Marketing teams tracking how users respond to AI answers or features
- Support teams analyzing large volumes of tickets tied to AI behavior
- Organizations building internal AI visibility dashboards with custom sentiment

MonkeyLearn shines when you need flexible, custom sentiment models built around your own language. Its no-code approach helps teams shape and track AI-related conversation with far more detail than generic tools allow.
